On October 25, 2024, we hosted the second session in our Ethical AI Talk Series, featuring Dr. Jonathan Beever of the UCF Center for Ethics at the University of Central Florida. His talk, titled “AI as a Problem of Public Health Ethics,” explored the intersection of artificial intelligence and public health and shed light on the ethical challenges AI poses for society.

Key Ethical Issues in AI

Dr. Beever outlined several pressing ethical issues that arise from AI technologies, including:

- Manipulation and Simulation: The ways AI can shape people's perceptions and actions, often without their informed consent.

- Various Forms of Harm: Dignitary, informational, physical, and environmental harms that AI systems can inadvertently (or intentionally) inflict.

- Emotional Displacement: The potential for AI to alter human emotions and social interactions.

- Privacy and Data Ownership: The challenge of protecting individual data rights as AI-driven data processing becomes increasingly pervasive.

Real-world examples, such as the misuse of AI to create “deepfake” media and other manipulative applications, illustrate these challenges and highlight the urgent need for stronger ethical guidelines.

Evolution of GPT Models and Ethical Considerations

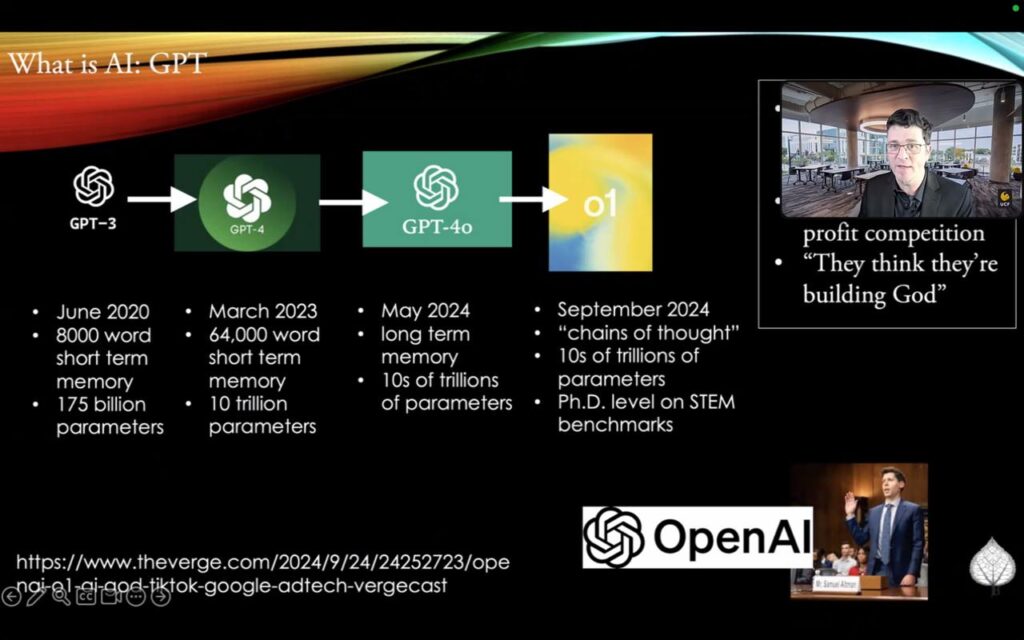

Dr. Beever traced the evolution of OpenAI’s GPT models, from GPT-3 in June 2020 to the recently launched advanced models with “chain-of-thought” reasoning capabilities. The growing complexity and expanding scope of these models raise profound ethical questions. He also pointed to the competitive nature of AI development, quoting one insider's remark, “They think they’re building God,” to underscore the high stakes involved.

AI Ethics as Public Health Ethics

Dr. Beever proposed viewing AI ethics through the lens of public health, highlighting how ethical principles such as Autonomy, Non-Maleficence, Beneficence, and Explicability can serve as a foundation for evaluating AI’s societal impact. This approach aligns with principles from the Belmont Report and other bioethical frameworks, advocating for a responsible and ethical integration of AI into public health.

Key Ethical Frameworks and Standards

Dr. Beever shared a table categorizing key ethical principles, cases, and human rights concepts from prominent entities such as UNESCO, Google, IBM, NIST, Microsoft, and the Department of Defense (DOD). The comparison emphasizes principles such as Accountability, Transparency, Privacy, and Safety, which are essential for ensuring that AI development serves the public interest.

The lecture concluded with a call for interdisciplinary collaboration to navigate the complex ethical landscape AI presents, especially in areas impacting public health and societal well-being.

This engaging session provided valuable insights for professionals and students in the field of AI ethics, reinforcing the need for ongoing dialogue and rigorous ethical standards in AI development.